Defining Your Organisation

Google Cloud recommends organizing your resources hierarchically so that you can manage access control and permissions across your organization. Grouping together all the resources that each team or project requires makes it easier to permit or deny access according to the business unit each team member belongs to.

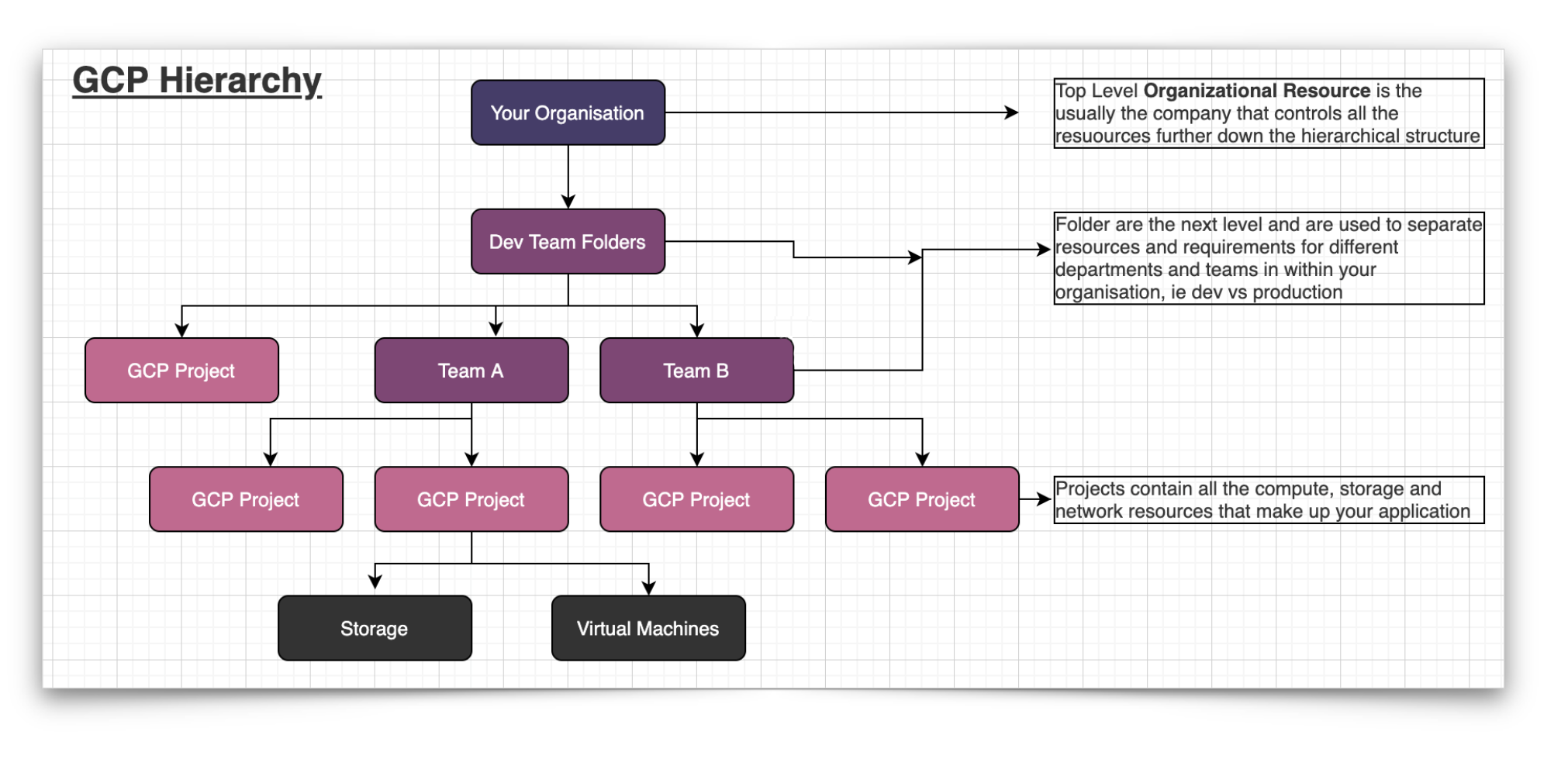

A typical organizational hierarchy might look something like this.

If you are just starting out on your Google Cloud Platform journey, adopting the simplest structure is advisable. While it is not mandatory for Google Cloud users to have an organization resource set up, some features of Resource Manager are not usable without one.

Organization Nodes

The organization resource is closely associated with a Google Workspace or Cloud Identity account: when a user from either type of account creates a GCP project, an organization resource is provisioned automatically.

A Workspace or Cloud Identity account can have just one organization provisioned with it. After the organization is created for an account domain, all subsequent projects created by members of the account will by default belong to that organization, so new projects are attached to the organization rather than to an individual user.

The benefit of ownership lying with the organization rather than with individual user accounts is that when a user leaves, the projects they created persist within the organization after their account is removed.

You can create an organization node that maps to your corporate internet domain via Cloud Identity, and you can migrate existing projects and billing accounts into that organization node.

Folders

The folder resource is another way to group and isolate projects. Folders are essentially sub-organizations under the organization node. They can represent different departments or legal entities in your organization and can be nested, so you could create one folder for the development department that contains folders for multiple teams.

Within individual team folders, you could then nest multiple folders for each application. With the appropriate permissions in place, you can view the folders from your Google Cloud Console.
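The nesting described above can be pictured as a simple tree. The following is only a sketch of that idea in Python, not a call to any Google Cloud API; the `Node` class and names such as `example.com`, `team-a` and `app-one-prod` are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """One resource in the hierarchy: an organization, a folder or a project."""
    name: str
    kind: str  # "organization", "folder" or "project"
    parent: Optional["Node"] = field(default=None, repr=False, compare=False)
    children: List["Node"] = field(default_factory=list)

    def add(self, name: str, kind: str) -> "Node":
        """Create a child resource underneath this one and return it."""
        child = Node(name, kind, parent=self)
        self.children.append(child)
        return child

    def path(self) -> str:
        """Return the chain of ancestors from the organization down to this node."""
        parts = []
        node: Optional["Node"] = self
        while node is not None:
            parts.append(node.name)
            node = node.parent
        return "/".join(reversed(parts))

    def projects(self) -> List["Node"]:
        """Collect every project at or below this node."""
        found = [self] if self.kind == "project" else []
        for child in self.children:
            found.extend(child.projects())
        return found


# Hypothetical hierarchy: organization > department > team > application > project
org = Node("example.com", "organization")
development = org.add("development", "folder")
team_a = development.add("team-a", "folder")
app_one = team_a.add("app-one", "folder")
prod = app_one.add("app-one-prod", "project")

print(prod.path())
print([p.name for p in development.projects()])
```

Because every project hangs off a folder, asking "which projects belong to the development department?" becomes a walk down one branch of the tree.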

Projects

Projects are the lowest-level entity for creating and provisioning all GCP services and resources. Each project has two identifiers, the project ID and the project number, plus a mutable display name. Projects also have a lifecycle state (for example ACTIVE or DELETE_REQUESTED), labels and a creation timestamp.

When interacting with most GCP resources, you need to identify the project in each request by providing either the project ID or the project number. The initial IAM policy for a newly provisioned project grants ownership to the creator of the project.
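As a rough illustration of the attributes listed above, a project can be modelled as a small record. This is a sketch only: the `Project` class is hypothetical and is not the Resource Manager client library. The ID check assumes the commonly documented format of 6 to 30 characters made up of lowercase letters, digits and hyphens, starting with a letter and not ending with a hyphen.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict

# Assumed project ID format: 6-30 chars, lowercase letters, digits and hyphens,
# starting with a letter and not ending with a hyphen.
PROJECT_ID_PATTERN = re.compile(r"[a-z][a-z0-9-]{4,28}[a-z0-9]")


@dataclass
class Project:
    """Hypothetical in-memory model of a project's metadata."""
    project_id: str       # chosen at creation time, used in most requests
    project_number: int   # assigned automatically
    display_name: str     # mutable, human-friendly name
    lifecycle_state: str = "ACTIVE"  # e.g. ACTIVE or DELETE_REQUESTED
    labels: Dict[str, str] = field(default_factory=dict)
    create_time: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def __post_init__(self) -> None:
        if not PROJECT_ID_PATTERN.fullmatch(self.project_id):
            raise ValueError(f"invalid project ID: {self.project_id!r}")


# Illustrative values only
project = Project(
    project_id="app-one-prod",
    project_number=123456789012,
    display_name="App One (production)",
    labels={"team": "team-a", "env": "prod"},
)
project.display_name = "App One"  # the display name can change; the ID cannot
print(project.project_id, project.lifecycle_state)
```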

Google recommends automating the creation of projects using tools like Cloud Deployment Manager, Terraform, Ansible or Puppet. Infrastructure as code has many benefits, including consistency, version control, tagging and deployment artifacts. It also enforces naming conventions, which makes it easier to refactor projects.

IAM - Identity and Access Management

Access to Google Cloud is managed using Google accounts, so every user needs one. Google recommends setting up fully managed Google accounts tied to your corporate domain using Cloud Identity, which is a standalone Identity as a Service (IDaaS) solution.

This enables employees to access your cloud projects using their corporate email address, and your admins can control access through the admin console.

If you are using an on-prem or third-party identity provider, you can sync your user directory with Cloud Identity so that your corporate credentials can be used to access Google Cloud.

IAM is used to grant granular access to specific GCP resources and to prevent unwanted access where required.

IAM allows you to create roles with specific permissions and then assign those roles to individual users. Google also supports groups and recommends using them: you assign IAM roles to each group, and when a new user joins your organisation you add them to a group and they inherit all the role permissions allocated to that group.

Typical groups might include:

- Organization Admins

- Network Admins

- Security Admins

- Billing Admins

- DevOps

- Developers
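The group-based approach can be sketched as two lookups: which roles a group holds, and which groups a user belongs to. The snippet below is an illustrative model rather than the IAM API. The group addresses and user names are hypothetical, and the pairing of each group with a predefined role is an assumption made for the example, not a recommendation from the source.

```python
from typing import Dict, Set

# Roles bound to each group (illustrative pairing of groups to predefined roles).
GROUP_ROLES: Dict[str, Set[str]] = {
    "gcp-organization-admins@example.com": {"roles/resourcemanager.organizationAdmin"},
    "gcp-network-admins@example.com": {"roles/compute.networkAdmin"},
    "gcp-security-admins@example.com": {"roles/compute.securityAdmin"},
    "gcp-billing-admins@example.com": {"roles/billing.admin"},
    "gcp-developers@example.com": {"roles/viewer"},
}

# Group membership starts empty and is filled in as people join.
GROUP_MEMBERS: Dict[str, Set[str]] = {group: set() for group in GROUP_ROLES}


def add_user_to_group(user: str, group: str) -> None:
    """Onboard a user by adding them to an existing group."""
    if group not in GROUP_MEMBERS:
        raise KeyError(f"unknown group: {group}")
    GROUP_MEMBERS[group].add(user)


def effective_roles(user: str) -> Set[str]:
    """Union of the roles of every group the user belongs to."""
    roles: Set[str] = set()
    for group, members in GROUP_MEMBERS.items():
        if user in members:
            roles |= GROUP_ROLES[group]
    return roles


# A new starter joins two groups and picks up both sets of roles.
add_user_to_group("alice@example.com", "gcp-network-admins@example.com")
add_user_to_group("alice@example.com", "gcp-developers@example.com")
print(sorted(effective_roles("alice@example.com")))
```

No role is ever bound to `alice@example.com` directly, so removing her from a group is enough to withdraw the access that group carried.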

Network Security

When you are designing a new network, Google Cloud strongly recommends mapping it out using VPCs (Virtual Private Clouds) to group and isolate the resources you will provision. VPCs allow you to set up scalable network infrastructure to hold your virtual machine instances and other services like GKE, Dataflow and Dataproc.

VPCs can span multiple regions. Each VPC can contain multiple subnets, each set up in an individual region, which provide the IP address ranges for your network and applications.

Firewalls

When you provision a GCP VPC network, a distributed virtual firewall is provided to control traffic into and out of your network. Firewall rules should be used to allow or deny access to GCP resources, including Compute Engine virtual machine instances and Kubernetes clusters. The stateful nature of the firewall automatically allows return egress traffic for any permitted ingress traffic.

Firewall rules apply to the particular VPC they are configured for. They allow you to specify the protocols and ports on which to allow or deny traffic, and to define the permitted source and destination of traffic using IP addresses, subnets, tags and service accounts.
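To make the allow and deny behaviour more concrete, here is a small Python model of ingress rule evaluation. It is a sketch under stated assumptions rather than a reproduction of the real firewall: it assumes that rules with a lower priority number are evaluated first, that a deny rule wins over an allow rule at the same priority, and that ingress traffic matching no rule is denied. The rule names and address ranges are hypothetical, and only source ranges, protocols and ports are modelled (not tags or service accounts).

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class IngressRule:
    """Simplified ingress rule: source range, protocol and optional ports."""
    name: str
    action: str                   # "allow" or "deny"
    source_range: str             # CIDR block the rule applies to
    protocol: str                 # e.g. "tcp" or "udp"
    ports: Tuple[int, ...] = ()   # empty tuple means every port
    priority: int = 1000          # lower number is evaluated first (assumed)

    def matches(self, source_ip: str, protocol: str, port: int) -> bool:
        in_range = ipaddress.ip_address(source_ip) in ipaddress.ip_network(
            self.source_range
        )
        port_ok = not self.ports or port in self.ports
        return in_range and protocol == self.protocol and port_ok


def evaluate_ingress(
    rules: Iterable[IngressRule], source_ip: str, protocol: str, port: int
) -> str:
    """Return the action of the first matching rule, or "deny" if none match."""
    # Sort by priority; at equal priority, deny rules sort ahead of allow rules.
    ordered = sorted(rules, key=lambda r: (r.priority, r.action != "deny"))
    for rule in ordered:
        if rule.matches(source_ip, protocol, port):
            return rule.action
    return "deny"  # assumed default for ingress traffic


# Hypothetical rule set for one VPC
RULES = [
    IngressRule("allow-https", "allow", "0.0.0.0/0", "tcp", (443,)),
    IngressRule("allow-ssh-from-office", "allow", "203.0.113.0/24", "tcp", (22,)),
    IngressRule("deny-blocked-range", "deny", "198.51.100.0/24", "tcp", priority=900),
]

print(evaluate_ingress(RULES, "192.0.2.10", "tcp", 443))
print(evaluate_ingress(RULES, "198.51.100.7", "tcp", 443))
```

The second call is denied even though `allow-https` covers every source address, because the deny rule carries the lower priority number and is therefore checked first.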

GKE (Google Kubernetes Engine) instances have different considerations in terms of firewall rules and managing traffic flow within the GKE cluster.

Keep external access to a minimum.

Every time you provision a new Google Cloud resource such as a VM instance, you place it in a subnet and it is assigned one of the internal IP addresses from the range associated with that subnet. This allows all the resources in the subnet to communicate with each other via internal IP traffic (subject to firewall rules).
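The relationship between a subnet's range and the addresses of the resources inside it can be checked with Python's standard `ipaddress` module. This is only an illustrative sketch: the `10.0.1.0/24` range and the instance names and addresses are made up for the example.

```python
import ipaddress
from typing import Dict

# Hypothetical subnet range and the internal addresses of resources placed in it
SUBNET_RANGE = ipaddress.ip_network("10.0.1.0/24")
INSTANCES: Dict[str, str] = {
    "web-1": "10.0.1.10",
    "web-2": "10.0.1.11",
    "db-1": "10.0.2.5",  # sits in a different subnet range
}


def in_subnet(ip: str, subnet=SUBNET_RANGE) -> bool:
    """True when the address falls inside the subnet's IP range."""
    return ipaddress.ip_address(ip) in subnet


def share_subnet(name_a: str, name_b: str) -> bool:
    """True when both instances have addresses inside SUBNET_RANGE."""
    return in_subnet(INSTANCES[name_a]) and in_subnet(INSTANCES[name_b])


print(share_subnet("web-1", "web-2"))
print(share_subnet("web-1", "db-1"))
```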

To communicate with the internet, resources need either a public IP address or, for outbound connections only, Cloud NAT. They also require a public IP address to communicate with resources in other VPCs where no other connection method, such as a VPN, is in place.

You should only assign public IP addresses to resources in your Google Cloud where absolutely necessary. Private Google Access is also available so that resources with no public IP address can reach Google APIs and services.

Shared VPC is another method of allowing separate projects to communicate via internal IP traffic. You designate one project as a host project and then attach other projects to it as service projects. All the VPCs in the host project become Shared VPC networks, which makes the subnets in the host project available to the attached service projects.

Secure your data and applications

All major cloud vendors approach security as a shared responsibility. They will do their utmost to ensure the physical and digital security of the facilities and hardware on which the cloud is built. It is up to you, however, to make sure the networks and infrastructure you build on top of GCP are secure and robust, so that your data and applications stay protected and available.

There are a number of services and strategies to help with that.

VPC Service Controls - use these to define a security perimeter around the resources of your Google-managed services and reduce the risk of data exfiltration.

HTTPS Load Balancer - helps you provide high availability and scaling for your public facing applications.

Google Cloud Armor - used in conjunction with load balancers, it can help mitigate DDoS attacks and restrict access to trusted IP addresses at the network edge.

Identity-Aware Proxy (IAP) - helps control access to your apps by verifying user identity and the context of each access request to decide whether a user should be granted access.

Monitoring, Logging and Environment Operations

It's not unusual for an organization to have multiple applications, perpetual CI/CD pipelines and various DevOps activities all happening at the same time. Ensuring a healthy network is a task for both developers and operations staff and having monitoring and logging regimes in place is invaluable in detecting and rectifying problems when they arise.

Google Cloud Logging is a service that allows you to collect, view, search and analyse log data, and to configure alerts based on detected events. Most Google Cloud services have native logging integration, while Compute Engine and even AWS EC2 instances can run a logging agent that sends information back to Cloud Logging.

Google Cloud Monitoring allows you to monitor the performance and health of your applications and infrastructure. Using out-of-the-box or custom metrics, you can monitor your Google Cloud ecosystem via dashboards, visualization tools and alerts.

From a governance perspective, it is also a good idea to export and retain your logs for a period of time. These can help with compliance requirements or historical analysis of network activity. You can use filters to include or exclude logs from the export, for example to exclude high-volume logs such as those from load balancers.
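The effect of an exclusion filter can be sketched in a few lines of Python. This is a conceptual model of the filtering step, not the Cloud Logging API or its filter language. The entry layout is hypothetical, and the resource type strings `http_load_balancer` and `gce_instance` are used on the assumption that they match the monitored resource types you see in your own logs.

```python
from typing import Dict, Iterable, List, Set

# Resource types whose logs are too high-volume to be worth exporting (assumed).
EXCLUDED_RESOURCE_TYPES: Set[str] = {"http_load_balancer"}


def entries_to_export(
    entries: Iterable[Dict[str, str]],
    excluded: Set[str] = EXCLUDED_RESOURCE_TYPES,
) -> List[Dict[str, str]]:
    """Keep every log entry whose resource type is not on the exclusion list."""
    return [entry for entry in entries if entry["resource_type"] not in excluded]


# Hypothetical log entries
LOG_ENTRIES = [
    {"resource_type": "gce_instance", "severity": "ERROR", "message": "disk full"},
    {"resource_type": "http_load_balancer", "severity": "INFO", "message": "GET /"},
    {"resource_type": "http_load_balancer", "severity": "INFO", "message": "GET /health"},
    {"resource_type": "gce_instance", "severity": "INFO", "message": "service restarted"},
]

exported = entries_to_export(LOG_ENTRIES)
print(len(LOG_ENTRIES), "entries received,", len(exported), "exported")
```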

Google Cloud Architecture Considerations

GCP provides a lot of managed services that can improve the efficiency of your tech stack. For instance, instead of provisioning a VM instance and then installing and managing MySQL yourself, you can use a MySQL database provided by Google's Cloud SQL. The database is managed by Google, so you don't have to worry about the underlying infrastructure, backups, updates or replication.

Designing high availability applications on GCP is achieved by leveraging the available zones and load balancing resources. If your network is designed to use multiple zones within a region, then should a particular zone experience an outage or be flooded with DDoS traffic, your load balancers will be able to route traffic to an alternate network segment so that your application persists and any negative performance impact is minimised.
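The zone failover idea can be illustrated with a toy router that only sends requests to zones currently marked healthy. It is a deliberately simplified sketch and not how Google's load balancers are implemented: the health map is hard-coded, the round-robin choice is an assumption made for the example, and the zone names are simply plausible examples.

```python
from typing import Dict, List


def healthy_zones(zone_health: Dict[str, bool]) -> List[str]:
    """Return the zones that are currently passing health checks."""
    return sorted(zone for zone, healthy in zone_health.items() if healthy)


def route(request_id: int, zone_health: Dict[str, bool]) -> str:
    """Pick a healthy zone for the request using simple round-robin."""
    candidates = healthy_zones(zone_health)
    if not candidates:
        raise RuntimeError("no healthy zones available")
    return candidates[request_id % len(candidates)]


# Three zones in one region; one of them has just gone offline.
ZONE_HEALTH = {
    "us-central1-a": False,
    "us-central1-b": True,
    "us-central1-c": True,
}

for request_id in range(4):
    print(request_id, "->", route(request_id, ZONE_HEALTH))
```

With only one zone in the map, the same outage would leave `route` with nothing to choose from, which is the single-zone risk the multi-zone design avoids.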

This strategy also holds true for entire regions. Storage can also be designed with high availability in mind. Some Google Cloud data storage options have the ability to replicate data across zones within a region and there are services that will automatically replicate data across multiple regions.

In addition to designing your network for high availability, it is also recommended that you have a disaster recovery plan in place for those rare but real occasions where a major disaster takes out multiple availability zones and/or regions impacting your applications. The recovery plan should be tested and amended as your production environments change. We'll most likely do a detailed blog post on disaster recovery planning shortly.

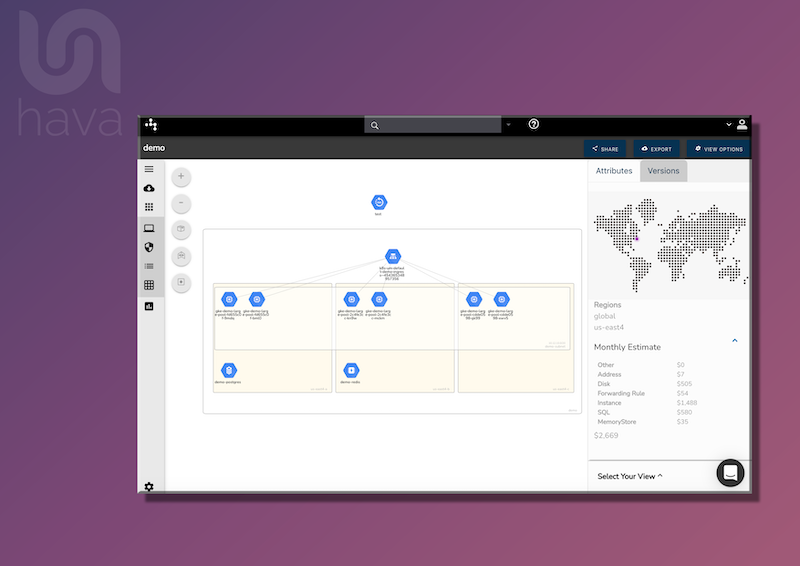

Visualizing Your Google Cloud

With any cloud based network, it is always beneficial to be able to visualize your network in diagram form. This can enable engineers to see what is currently configured and relate it to the concepts discussed above.

- What do your VPCs look like?

- What happens if an availability zone goes offline?

- Is your data replicated?

- Will your HTTPS load balancer handle a DDoS attack?

- Do you even load balance?

- Application performance just nose-dived. What changed?

These are the sorts of questions you can easily answer when you connect your GCP account to Hava.

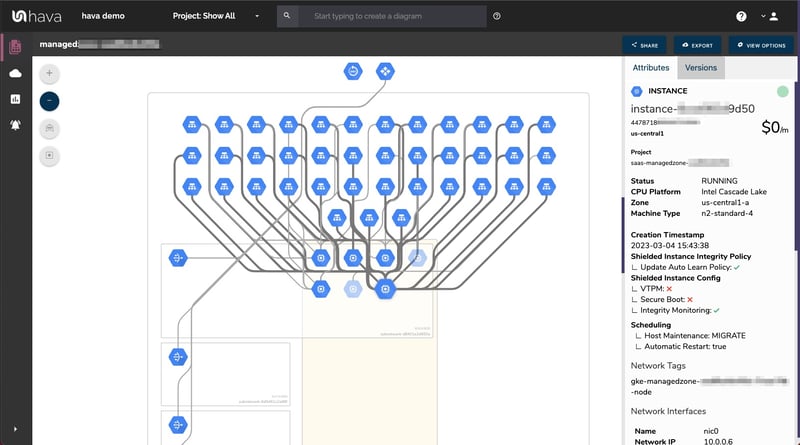

Once connected, Hava imports and analyses your GCP environment and creates a diagram set for each discovered VPC. You can instantly see your resources, which subnets they belong to, which zones are being used and in which regions.

This enables you to visually assess what would happen if a region or a zone went offline. You can also interrogate each resource to see all its settings and metadata, such as public and private IP addresses, directly on the interactive diagram. Simply click on a resource and the attribute pane to the right of the diagram will display all the rich metadata discovered.

You can also view connections between resources to assess where your load balancers are sending traffic, which should also tell you whether your applications would persist if a zone or region experienced an outage.

Hava diagrams are auto-generated, and continuous monitoring will update and replace your GCP network topology diagrams when changes are detected. Superseded diagrams are placed in a version history. This can be used to answer the 'what changed' question when unexpected events occur, without having to manually comb through logs.

You can take a look at Hava for yourself by taking advantage of a free trial.
